Category Archives: Data Quality

All data is not created equal.

Last week Toyota announced that it would end production of the Scion brand. Toyota launched Scion thirteen years ago because its data showed that small cars were a good bet. Times were different in 2003. The US was still adapting to a post-9/11 world, gas prices were rising, and the economy was recovering. The job market was good for college graduates, and Toyota wanted to offer a product that twentysomethings could afford and that fit their lifestyle. The data suggested that Scion was a good investment.

For years Scion made money and performed decently. Then, in 2007, the economy took another tumble. The middle class was hit especially hard, and Scion lost sales because its target demographic took the biggest financial hit from the downturn. Toyota eventually teamed with Subaru to build the FR-S/BRZ, trying to change the public perception of Scion as a small, inexpensive car brand for twentysomethings. Even after these efforts, Scion's sales barely improved. No company can see the future, but if Toyota had collected more data and adapted Scion's business plan, for example by adding a small SUV when gas prices started to drop, or by designing its small cars to fit two adults and a child seat comfortably, it might have been able to increase its US market share. In the end, Toyota chose to cut its losses and move on.

What can be learned from these business decisions? Data can be collected from many sources, but the strength of that data needs to be weighed, and its quality and source matter too. In Scion's case, Toyota might have given falling gas prices more weight and built larger vehicles that carry more people comfortably, accepting lower fuel economy as the trade-off. Or it could have focused on the losses the middle class was experiencing and built a luxury sedan aimed at a higher-income demographic. In the end, Toyota may have feared competing too closely with its two other automobile brands and simply kept doing the same thing, hoping for the best.

Self-Service Analytics

Recent articles about data analytics have pointed to the need for products designed for self-service users. Historically, data analytics has been performed by data professionals or IT personnel using temperamental, complicated tools. In a recent article on the future of data analytics, Daniel Gutierrez points to the changing trends in, and benefits of, self-service platforms that enable everyday users to function as data analysts.

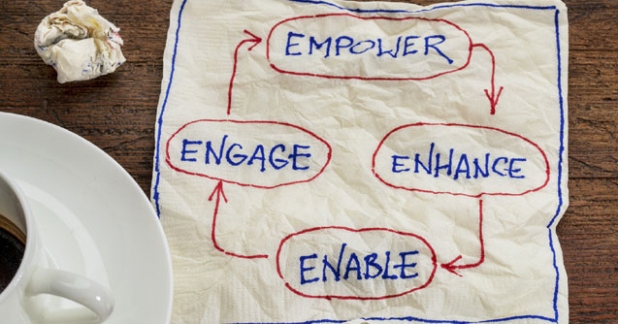

Many companies have built visually appealing and informative features into their software in an effort to empower less-experienced users to easily analyze their data, identify trends, and derive new business strategies. This can be helpful at times, but sometimes it just makes things even more difficult to understand. While it is good to enable business users to analyze data, if the data is corrupted or out of date, users are still left in the dark. By addressing data quality first and building queries on a foundation of accurate data, users can reach more accurate conclusions. This is why at Aim-Smart we believe that giving business/self-service users the power to match, deduplicate, and verify the quality of data is a necessary first step in their data analysis.

With a data quality tool that runs inside Excel, business/self-service users can clean, match, and deduplicate data in a familiar environment. This shortens the learning curve for less experienced users, and it lets them manipulate the data with the Excel features they already know. Building queries on a clean data foundation lets them reach conclusions with greater accuracy. It also removes the need to pass data back and forth with IT personnel for cleansing and verification, which reduces the number of points where data can be corrupted.
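
To make "match and deduplicate" concrete, here is a minimal Python sketch, not Aim-Smart's implementation. It builds a normalized match key from a name and an email address and keeps the first row seen for each key. The field names (`name`, `email`), the normalization rules, and the helper functions are hypothetical choices for illustration.

```python
import re

def match_key(row: dict) -> tuple:
    """Build a normalized key from the (hypothetical) name and email fields."""
    name = re.sub(r"[^a-z ]", "", row["name"].lower())  # drop punctuation and digits
    name = " ".join(name.split())                       # collapse repeated spaces
    email = row["email"].strip().lower()
    return (name, email)

def deduplicate(rows: list) -> list:
    """Keep the first row seen for each match key, preserving the original order."""
    seen = set()
    unique = []
    for row in rows:
        key = match_key(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

rows = [
    {"name": "Jane  Doe", "email": "JANE@example.com "},
    {"name": "jane doe.", "email": "jane@example.com"},
    {"name": "John Smith", "email": "john@example.com"},
]
print(len(deduplicate(rows)))  # 2
```

Exact matching on a normalized key is the simplest approach; it catches formatting differences but not misspellings, which is where fuzzy matching comes in.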

Is your company ready for the future of data management? Have you taken the steps necessary to empower your employees to make your business more successful?

Helping small and medium-sized businesses compete with big-business analytics.

With the New Year upon us, it is a great time to assess where your business can become more efficient. Businesses' capacity for analytics is growing faster and faster: thanks to modern computers and advanced software, interested parties can collect and evaluate more customer data than ever before. Businesses have to be cautious, though. If the data isn't as accurate as possible, the results it supplies will have holes. Since the economic crash a few years ago, an increasing number of customers have rapidly changing information. Many former homeowners now rent and move more often. Many are changing phone plans and phone numbers. Others get married or pass away. There are far fewer constants today than in the past. As companies collect more data, it becomes more difficult to verify whether data acquired in the past is still accurate. The result is multiple records for the same customer with varying levels of accuracy. These records not only clutter company databases; keeping incorrect data also wastes company resources. Large companies hire outside firms or dedicate internal resources to monitor their data and remove inaccurate information.
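
One common way to resolve several records for the same customer is a "most recent wins" survivorship rule. The sketch below assumes each record already carries a customer ID and a last-updated date (both hypothetical fields); in practice, identifying which records belong to the same customer usually requires matching first.

```python
from datetime import date

# Hypothetical records; 555-01xx numbers are reserved for fictional use.
records = [
    {"customer_id": 101, "phone": "555-0100", "updated": date(2012, 3, 1)},
    {"customer_id": 101, "phone": "555-0199", "updated": date(2015, 7, 9)},
    {"customer_id": 202, "phone": "555-0142", "updated": date(2014, 1, 20)},
]

def latest_per_customer(records):
    """Return one record per customer_id: the one with the newest 'updated' date."""
    best = {}
    for rec in records:
        cid = rec["customer_id"]
        if cid not in best or rec["updated"] > best[cid]["updated"]:
            best[cid] = rec
    return list(best.values())

for rec in latest_per_customer(records):
    print(rec["customer_id"], rec["phone"])
# 101 555-0199
# 202 555-0142
```

Recency is only one possible rule; a record could instead be chosen for completeness or for coming from a more trusted source.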

We know that incorrect data greatly affects large companies. Poor data has led several large companies to make decisions that eventually pushed them out of the marketplace. Companies like Kodak, RCA, and Motorola, once Fortune 500 names, have since gone bankrupt, been broken up, or been sold off. If poor decisions can ruin large companies, what does that say about the need for small and medium-sized companies to base their business decisions on accurate data?

In today’s business environment, small and medium-sized businesses struggle with limited access to effective tools for working with data. Big businesses can afford expensive software products, which makes it difficult for small and medium-sized businesses to compete. But what about the little guy? This is where Aim-Smart comes in. Aim-Smart is a powerful Excel data quality solution for small and medium-sized companies. Not only is it fairly priced, it also lets small and medium-sized businesses leverage best-in-class data matching, address validation, and data analysis within Excel.

What will your company do this year to increase data quality?

Excel Address Verification is Here!

I’m happy to announce that Excel Address Verification is now available in the US! You can use it to easily ensure address deliverability, improve data quality, improve matching, and also certify your mail with CASS.

What is CASS?

USPS ZIP + 4® CASS™ (Coding Accuracy Support System) certification helps prevent undeliverable mail caused by bad or poor-quality addresses. Each year the US alone handles over 1.7 billion pieces of mail that can’t be delivered because of missing address data. The cost to the USPS is exorbitant: a jaw-dropping $159 million. Consequently, the US Postal Service implemented a program to incentivize companies to send only “good” mail.

USPS requires all CASS Certified™ vendors, such as Aim-Smart, to perform DPV and LACSLink processing when correcting an address. We do this work behind the scenes without requiring anything additional from the user.

What is DPV?

DPV (Delivery Point Validation) ensures your mailing list has deliverable addresses. Whereas typical address correction processes only match an address to a range of valid addresses, DPV verifies that a mailing address is a known USPS delivery point.

To illustrate, suppose we have the address 456 Springfield Blvd. Standard address correction might report the address as valid and assign it a ZIP+4 code, when all the processor really did was confirm that 400–500 is a valid range for Springfield Blvd. In reality, there might be no 456 Springfield Blvd at all. DPV avoids this situation: Aim-Smart’s address verification for Excel reports an error code and a corresponding message indicating that the address is not deliverable.
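
The difference between a range check and a delivery-point check can be sketched in a few lines of Python. The street range and delivery-point set below are invented stand-ins for USPS reference data, and both functions are hypothetical. Real DPV processing runs against licensed USPS data, not a hand-built set.

```python
# Hypothetical reference data for illustration only (not real USPS data).
# A street range says which house numbers *could* exist on a street...
STREET_RANGES = {"SPRINGFIELD BLVD": (400, 500)}
# ...while a delivery-point list says which addresses actually receive mail.
DELIVERY_POINTS = {("SPRINGFIELD BLVD", 410), ("SPRINGFIELD BLVD", 452)}

def range_check(number: int, street: str) -> bool:
    """Range-only check: passes if the number falls inside the street's range."""
    low, high = STREET_RANGES.get(street, (1, 0))  # empty range if street unknown
    return low <= number <= high

def delivery_point_check(number: int, street: str) -> bool:
    """DPV-style check: passes only if this exact address is a known delivery point."""
    return (street, number) in DELIVERY_POINTS

print(range_check(456, "SPRINGFIELD BLVD"))           # True
print(delivery_point_check(456, "SPRINGFIELD BLVD"))  # False
```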

What is LACSLink?

Many local governments are assigning new addresses to rural-route properties so that emergency services (firefighters, police, etc.) can find them more quickly. You can imagine how confusing it could be to find an address like 109506 County Road 1. As these addresses are renumbered and street names are added, the USPS needs the updated addresses to deliver mail efficiently. That is what LACSLink (built on the USPS Locatable Address Conversion System) does: it provides the lookup between the old rural-route address and its updated counterpart. As with DPV, this happens automatically, behind the scenes.
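
Conceptually, a LACSLink-style conversion is a lookup from an old address to its replacement. Here is a minimal sketch, assuming a hypothetical in-memory conversion table; the new address shown is invented, and real conversions come from USPS LACSLink data.

```python
# Hypothetical conversion table: old rural-style address -> new city-style address.
# The entry below is invented for illustration.
CONVERSIONS = {
    "109506 COUNTY ROAD 1": "2140 PINE RIDGE RD",
}

def normalize(address: str) -> str:
    """Uppercase and collapse whitespace so formatting differences don't break lookups."""
    return " ".join(address.upper().split())

def convert_address(address: str) -> str:
    """Return the updated address if a conversion exists, else the normalized input."""
    key = normalize(address)
    return CONVERSIONS.get(key, key)

print(convert_address("109506 County Road  1"))  # 2140 PINE RIDGE RD
print(convert_address("456 Springfield Blvd"))   # 456 SPRINGFIELD BLVD
```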

More information on DPV and LACSLink can be found at:

https://www.usps.com/business/manage-address-quality.htm

http://zip4.usps.com/ncsc/addressmgmt/dpv.htm

http://zip4.usps.com/ncsc/addressservices/addressqualityservices/lacsystem.htm

South African Support

We recently added South African support to Aim-Smart. Users can now parse ZA addresses and phone numbers, match names using fuzzy logic, and perform other data quality functions within Excel.
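
Fuzzy name matching scores how similar two names are instead of requiring an exact match. This sketch does not show the engine Aim-Smart uses. As a stand-in, it uses Python's standard `difflib.SequenceMatcher` similarity ratio. The 0.85 threshold and the helper names are assumptions.

```python
from difflib import SequenceMatcher

def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] after lowercasing and trimming both names."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()

def is_probable_match(a: str, b: str, threshold: float = 0.85) -> bool:
    """Treat two names as the same person if similarity meets an assumed threshold."""
    return similarity(a, b) >= threshold

print(is_probable_match("Johannes van der Merwe", "Johan van der Merwe"))  # True
print(is_probable_match("Johannes van der Merwe", "Pieter Botha"))         # False
```

The threshold controls a trade-off. If it is set too low, different people get merged. If it is set too high, obvious variants of one name are kept as separate people.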

One of the most complicated features to implement was parsing full South African addresses, because the format is not consistent. Addresses often specify only the suburb rather than the city, but not always: sometimes both are present, and sometimes only the city is given. The province is usually omitted, though again not always. Here are some example addresses:

Waterfront Drive Knysna, South Africa 6571

Old Rustenburg Road Magaliesburg, South Africa 1791

Summit Place Precinct (corner of N1 North and Garsfontein off ramp), 213 Thys St Menlyn, Pretoria, Gauteng 0181

277 Main Rd, Sandton, South Africa

Johannes Road Randburg, South Africa

Porterfield Rd Cape Town, South Africa

As you can see, the format varies significantly; however, the new engine can handle most of the formats we found and does a great job matching addresses.
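
To show why these variations are tricky, here is a heuristic Python sketch. It is not Aim-Smart's engine. It peels optional pieces off the end of an address one at a time. A trailing four-digit number is treated as the postal code, "South Africa" as the country, and a known province name as the province. The suburb or city is then either the last comma-separated segment or the words after the last street-type word. The street-word list and the function name are assumptions, and the sketch leaves a fused suburb inside earlier segments (for example, "Menlyn" in the Summit Place address) as part of the street.

```python
import re

# South Africa's nine provinces, used to spot an optional province segment.
PROVINCES = {
    "eastern cape", "free state", "gauteng", "kwazulu-natal", "limpopo",
    "mpumalanga", "northern cape", "north west", "western cape",
}
# Street-type words (assumed list); words after the last one are the suburb/city.
STREET_THEN_LOCALITY = re.compile(
    r"^(.*\b(?:Road|Rd|Drive|Dr|Street|St|Place|Avenue|Ave))\s+(.+)$", re.IGNORECASE
)

def parse_za_address(text: str) -> dict:
    """Heuristic parse of a one-line South African address (a sketch, not a full parser)."""
    result = {"street": None, "locality": None, "province": None,
              "country": None, "postal_code": None}
    text = text.strip()

    # 1. A trailing four-digit number is taken to be the postal code.
    m = re.search(r"\s(\d{4})$", text)
    if m:
        result["postal_code"] = m.group(1)
        text = text[: m.start()]

    # 2. Peel optional country and province segments off the end.
    parts = [p.strip() for p in text.split(",") if p.strip()]
    if parts and parts[-1].lower() == "south africa":
        result["country"] = "South Africa"
        parts.pop()
    if parts and parts[-1].lower() in PROVINCES:
        result["province"] = parts.pop()
    if not parts:
        return result

    # 3. The locality is either fused onto the street ("Porterfield Rd Cape Town")
    #    or sits in its own comma-separated segment ("277 Main Rd, Sandton").
    fused = STREET_THEN_LOCALITY.match(parts[-1])
    if fused:
        result["locality"] = fused.group(2)
        parts[-1] = fused.group(1)
    elif len(parts) > 1:
        result["locality"] = parts.pop()
    result["street"] = ", ".join(parts)
    return result

print(parse_za_address("Waterfront Drive Knysna, South Africa 6571"))
print(parse_za_address("277 Main Rd, Sandton, South Africa"))
```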

Gender guessing is supported, but we can’t provide the statistical probabilities available for US names because we weren’t able to find a data source from which to calculate them.
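
For context, here is a minimal sketch of how a probability-based gender guess typically works when such a data source exists. The probability is the share of people with a given first name who are recorded as each gender. The counts below are invented purely for illustration, and the table and function are hypothetical.

```python
# Invented counts for illustration only; a real model needs a source such as
# national first-name statistics, which is what was unavailable for ZA.
NAME_COUNTS = {
    "thabo": {"male": 980, "female": 20},
    "lerato": {"male": 150, "female": 850},
}

def guess_gender(first_name: str):
    """Return (gender, probability) from counts, or (None, None) if the name is unknown."""
    counts = NAME_COUNTS.get(first_name.strip().lower())
    if not counts:
        return None, None
    total = sum(counts.values())
    gender = max(counts, key=counts.get)  # most frequent gender for this name
    return gender, counts[gender] / total

print(guess_gender("Thabo"))   # ('male', 0.98)
print(guess_gender("Lerato"))  # ('female', 0.85)
```

Without counts like these, a guesser can still return the most likely gender from a name list, but it cannot report how confident that guess is.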

As with other countries, adding ZA support is very reasonably priced. Good luck!

Why is data cleansing important to companies?

The U.S. economy wastes an estimated $3 trillion per year due to incorrect, inconsistent, fraudulent, and redundant data, and businesses bear a large portion of that cost. The Data Warehousing Institute estimates that bad data alone costs U.S. businesses more than $600 billion a year. Much of that cost could be avoided by investing a far smaller amount earlier in business data processing. No business is immune to the lost sales and extra expenses caused by incorrect data. However, businesses can limit these losses through proper data cleansing.

The 1:10:100 rule, often attributed to W. Edwards Deming, says that it costs $1 to verify a record when it is first entered, $10 to clean or deduplicate it after entry, and $100 per record if nothing is done to fix the issue. Part of this cost comes from wasted mailings: the average company wastes $180,000 per year on direct mail that does not reach the intended recipient because of inaccurate data. Advertising aimed at the wrong demographics is another cost of poor or out-of-date information.
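
The rule's arithmetic scales linearly with the number of records. This short Python sketch applies the three per-record costs quoted above to a batch of records. The batch size and the helper name are arbitrary examples.

```python
# Per-record costs, in dollars, from the 1:10:100 rule described above.
COST_PER_RECORD = {"verify_at_entry": 1, "clean_later": 10, "do_nothing": 100}

def rule_cost(records: int) -> dict:
    """Total cost of handling `records` records at each stage, in dollars."""
    return {stage: records * cost for stage, cost in COST_PER_RECORD.items()}

print(rule_cost(5_000))
# {'verify_at_entry': 5000, 'clean_later': 50000, 'do_nothing': 500000}
```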

The most common data quality issues are duplicate and outdated records. Within one month of being collected, about 2% of customer data becomes incorrect because of moves, deaths, marriages, or divorces. If you receive data from an outside source, such as another department or another business, that source may not know how old the records are; if imported data is several months old, 10% or more of it may be inaccurate. By having tools in house, companies can limit the amount of incorrect data they deal with. One issue with in-house data cleaning is that companies often rely heavily on their IT departments for it. IT professionals have expert knowledge of technical issues but often lack in-depth knowledge of data processing best practices. Companies usually employ data analysis experts too, but they, in turn, often lack in-depth IT knowledge. How well a business uses its data depends on these professionals’ ability to work at their best. Time is often lost because of poor communication between the two departments. In addition, misunderstandings can cause incorrect information to be returned, forcing parts of the process to be redone.
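
The 2%-per-month figure can be extended over longer periods. This sketch assumes that 2% of the still-accurate records go stale each month. That is a simplifying assumption, since the text gives only the one-month figure. Under it, the stale share after m months is 1 − 0.98^m.

```python
def stale_share(months: int, monthly_rate: float = 0.02) -> float:
    """Fraction of records expected to be outdated after `months`, assuming a
    constant monthly rate applied to the records that are still accurate."""
    return 1 - (1 - monthly_rate) ** months

for m in (1, 3, 6, 12):
    print(m, f"{stale_share(m):.1%}")
# 1 2.0%
# 3 5.9%
# 6 11.4%
# 12 21.5%
```

Under this assumption, data that is six months old comes out at roughly 11% outdated, which is consistent with the "10% or more" figure for imported data.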

What does Aim-Smart do to eliminate these issues? Aim-Smart is built with the business user, the analysis expert, and IT personnel in mind. With Aim-Smart, business users and analysis experts can manipulate data with the speed and accuracy of IT professionals while applying their own knowledge of advanced data processing techniques to remove or update data as needed. All of this happens within Excel, a comfortable environment for any business professional. IT professionals are then free to focus on maintaining the company’s data storage platforms, and many opportunities for misunderstanding and communication breakdown are removed. It is estimated that up to 50% of IT expenses are spent on data cleaning. Aim-Smart moves most of the data cleaning process out of the IT department. Putting data cleaning in the hands of business users reduces demands on IT and lets IT professionals focus on strengthening and updating the company’s internal systems. Aim-Smart lets business users remove data or update records they find to be out of date. It also lets them reshape the data as they need through parsing, standardization, and other features. As a result, company marketing budgets can be spent more effectively on contacts and data results that are accurate, making the most of company money spent on advertising and other expenses.
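
Standardization puts values into one consistent format so they can be compared and matched. Here is a minimal Python sketch for US phone numbers. The target format `(XXX) XXX-XXXX`, the handling of a leading country code 1, and the function name are all assumptions for illustration, not a description of Aim-Smart’s feature.

```python
import re

def standardize_us_phone(raw: str):
    """Format a US phone number as (XXX) XXX-XXXX; return None if it can't be read.

    Assumes 10 digits, optionally preceded by the country code 1.
    """
    digits = re.sub(r"\D", "", raw)          # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                  # drop the leading country code
    if len(digits) != 10:
        return None
    return f"({digits[:3]}) {digits[3:6]}-{digits[6:]}"

for raw in ["555.010.0199", "+1 (555) 010-0199", "010-0199"]:
    print(raw, "->", standardize_us_phone(raw))
# 555.010.0199 -> (555) 010-0199
# +1 (555) 010-0199 -> (555) 010-0199
# 010-0199 -> None
```

Once both spellings of the number reduce to the same string, the exact-match deduplication described earlier can treat them as one contact.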

Source:

“$3 Trillion Problem: Three Best Practices for Today’s Dirty Data Pandemic” by Hollis Tibbetts