How to Smart Match Despite Data Quality Differences

One of the challenges in matching is inconsistent data quality between the sources being matched. Often, users are trying to match one input against a “master” source, such as a CRM system or a Master Data Management (MDM) system. In these cases, the master’s data quality is typically high. The input data source, on the other hand, is often sporadically populated and generally of poorer quality.

Aim-Smart can help identify problems in the source data and standardize the data in either source so that the formatting is consistent. The remaining issue is how to match data between the two sources given the differences in quality.

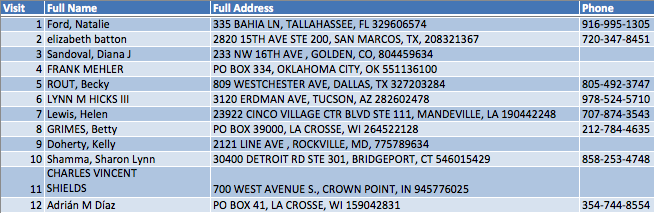

The input data source might look something like this:

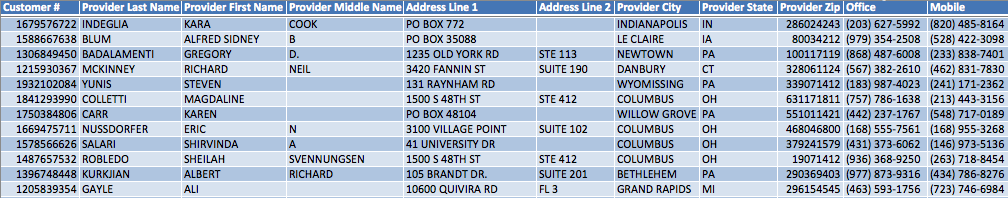

The MDM/CRM source might look more like this:

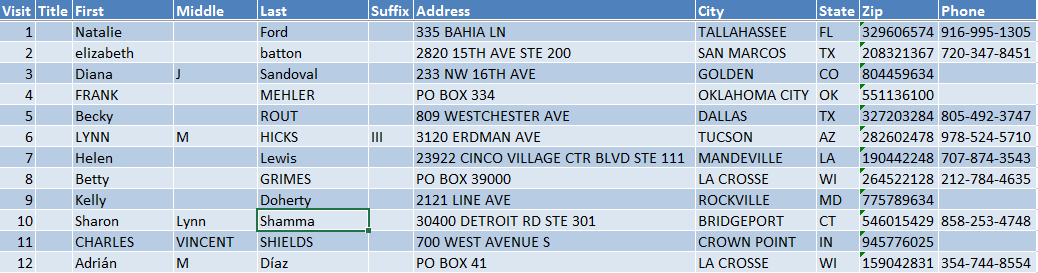

We can use Aim-Smart to parse the full name and full address into more granular components so that they align more directly with our master source. After parsing, the input might look like this:

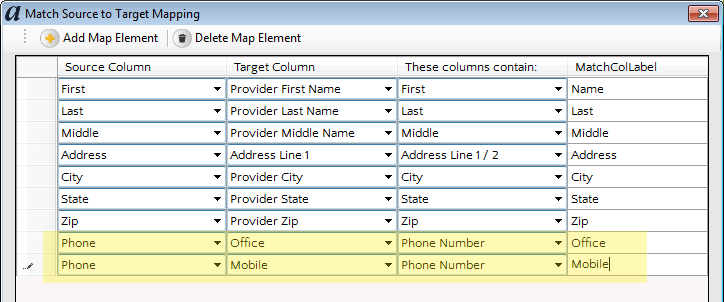

Note that while the master source has two different columns for phone number, the input source has only one. Furthermore, we don’t know whether the input source records the office phone, the mobile phone, or a mixture of the two. Therefore, let’s map the fields as shown below; doing so allows any phone number in the input source to match either the office or the mobile phone in the master.

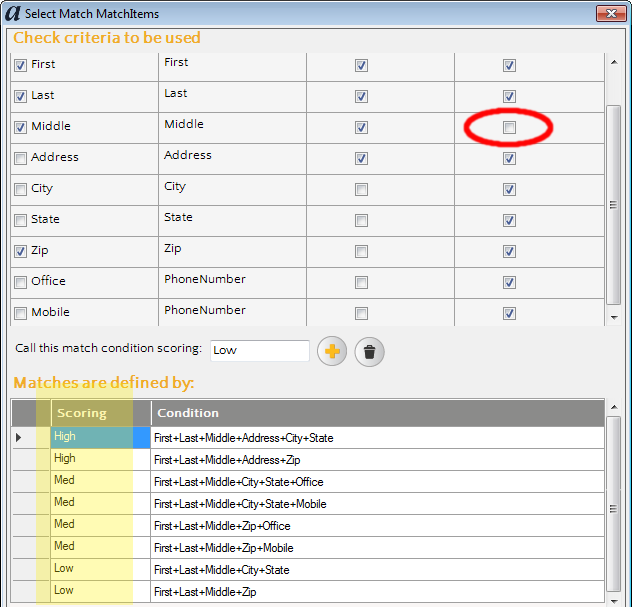
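As a rough illustration of this one-to-many mapping, here is a minimal Python sketch. It is not Aim-Smart’s own logic, which is configured in the add-in rather than written as code. The function names `normalize_phone` and `phone_matches` are hypothetical, and the digits-only normalization (including dropping a leading US country code) is an assumption standing in for whatever standardization you apply beforehand.

```python
import re


def normalize_phone(raw):
    """Keep digits only; drop a leading US country code from 11-digit numbers (assumption)."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


def phone_matches(input_phone, master_office, master_mobile):
    """Hypothetical helper: the single input phone may match either master column."""
    candidate = normalize_phone(input_phone)
    if not candidate:
        return False  # a blank input phone cannot confirm a match
    master_phones = {normalize_phone(master_office), normalize_phone(master_mobile)}
    master_phones.discard("")  # ignore blank master columns
    return candidate in master_phones


# The input recorded a mobile number; it still matches via the master's mobile column.
print(phone_matches("(626) 555-0142", "626-555-0100", "626.555.0142"))  # True
```

Because the input phone is compared against both master columns, it does not matter which kind of number the input source happened to capture.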

I’ve found that the best way to overcome differences in data quality between an input and a master is to create a series of overlapping match rules. For example, given the data in these two sources, I might create a tiering of match rules that looks something like this:

Note that the first and second “High” matches are similar. The first searches for matches using address + zip, whereas the second uses address + city + state. Although in theory the second rule shouldn’t find any new matches, I’ve found that it often does, especially when a zip code is not known. The reverse is often true as well: someone may give their city as “Los Angeles” instead of “Monterey Park” because the former is more easily recognized, so the city won’t match but the zip code will.

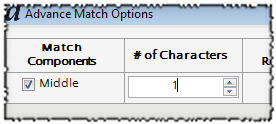
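The overlap between the two High rules can be sketched in Python. This is a minimal illustration under stated assumptions, not Aim-Smart’s matching engine: the `RULES` list, the field names, and the `field_equal` and `classify` helpers are hypothetical, each rule is treated as an exact comparison of populated fields, and the first/last name fields in each rule are assumed since only the address portions are described above.

```python
# Hypothetical rule tiers. Only the two "High" rules described above are modeled;
# the first/last name fields in each rule are assumptions.
RULES = [
    ("High", "address+zip", ("first", "last", "address", "zip")),
    ("High", "address+city+state", ("first", "last", "address", "city", "state")),
]


def field_equal(a, b, field):
    """A field matches only if both sides are populated and equal (case-insensitive)."""
    va = (a.get(field) or "").strip().lower()
    vb = (b.get(field) or "").strip().lower()
    return bool(va) and va == vb


def classify(input_rec, master_rec, rules=RULES):
    """Return (tier, rule name) for the first rule whose fields all match, else None."""
    for tier, name, fields in rules:
        if all(field_equal(input_rec, master_rec, f) for f in fields):
            return tier, name
    return None


master = {"first": "Victor", "last": "Fehlberg", "address": "123 Main St",
          "city": "Monterey Park", "state": "CA", "zip": "91754"}
no_zip = {**master, "zip": ""}                 # zip unknown in the input
big_city = {**master, "city": "Los Angeles"}   # better-known city name given
print(classify(no_zip, master))    # ('High', 'address+city+state')
print(classify(big_city, master))  # ('High', 'address+zip')
```

Because either rule can fire, a record missing its zip and a record using a better-known city name both still land in the High tier.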

Next, note that I have disabled the “Must Exist to Match” checkbox on middle name. This means that records missing a middle name in either the input or the master will still match each other; however, if a middle name is provided in both the input and the master and the two conflict, the match is broken. Thus, Victor A. Fehlberg matches Victor Fehlberg, but Victor A. Fehlberg does not match Victor B. Fehlberg. The same logic can be applied to suffixes: if you want John Smith to match John Smith, Jr., but don’t want John Smith, Sr. to match John Smith, Jr., you can use the same approach. In case you’re curious, here’s what the smart options looked like for middle name. In addition to enabling the component, I set it to use only one character so that John Albert Smith would match John A Smith.
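The conditional middle-name behavior can be expressed as a small Python sketch. The function `optional_component_matches` and its `compare_chars` parameter are hypothetical stand-ins for the “Must Exist to Match” checkbox and the one-character smart option; this is not Aim-Smart’s API. Ignoring case and trailing periods is an assumption.

```python
def optional_component_matches(a, b, compare_chars=None):
    """Model of a component with "Must Exist to Match" disabled.

    A blank value on either side is not a conflict; two populated values must
    agree, optionally comparing only their first `compare_chars` characters.
    """
    a = (a or "").strip().rstrip(".").lower()
    b = (b or "").strip().rstrip(".").lower()
    if not a or not b:
        return True  # missing on either side: the component does not break the match
    if compare_chars is not None:
        a, b = a[:compare_chars], b[:compare_chars]
    return a == b


# Middle names, compared on one character.
print(optional_component_matches("A.", "", compare_chars=1))       # True
print(optional_component_matches("A.", "B.", compare_chars=1))     # False
print(optional_component_matches("Albert", "A", compare_chars=1))  # True
# Suffixes, compared in full.
print(optional_component_matches("", "Jr."))     # True
print(optional_component_matches("Sr.", "Jr."))  # False
```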

Returning to the match rules, note that the “Medium” matches are defined as a superset of the High matches. This is helpful because the lower-confidence matches can account for further data quality degradation or differences. When might this happen? A common scenario is when one system holds a person’s home address while the other holds an office address. Another is when a person works at two addresses, e.g., a doctor who has an office but also works at the local hospital.
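The superset relationship can be checked mechanically. In this hypothetical Python sketch, each rule is a set of fields that must all match; the Medium rules, including the name + phone rule, are assumptions made for illustration, since the actual tiers appear only in the screenshot. The doctor scenario is modeled as one record with an office address and another with a hospital address.

```python
# Hypothetical tiers expressed as sets of fields that must all match.
HIGH = [
    {"first", "last", "address", "zip"},
    {"first", "last", "address", "city", "state"},
]
# Assumed Medium rules: drop the address so home/office differences can still match.
MEDIUM = [
    {"first", "last", "zip"},
    {"first", "last", "city", "state"},
    {"first", "last", "phone"},
]


def is_superset_tier(looser, stricter):
    """True if each strict rule's fields include every field of some looser rule.

    Any pair satisfying that strict rule then also satisfies the looser one,
    so the looser tier matches everything the stricter tier does.
    """
    return all(any(loose <= strict for loose in looser) for strict in stricter)


def matches(rule, a, b):
    """All fields in the rule are populated and equal."""
    return all(bool(a.get(f)) and a.get(f) == b.get(f) for f in rule)


office = {"first": "Ann", "last": "Lee", "address": "10 Oak Ave", "city": "Pasadena",
          "state": "CA", "zip": "91101", "phone": "6265550100"}
hospital = {**office, "address": "500 Elm St", "city": "Arcadia", "zip": "91006"}

print(is_superset_tier(MEDIUM, HIGH))                     # True
print(any(matches(r, office, hospital) for r in HIGH))    # False
print(any(matches(r, office, hospital) for r in MEDIUM))  # True (via name + phone)
```

Making each looser rule a subset of some stricter rule is what guarantees the Medium tier never loses a match the High tier would have found.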

With match rules that cover a wide variety of data quality differences, and the ability to conditionally match a middle name (or suffix), you’ll find increased confidence in your match results. Happy matching!

Why accurate parsing is so important

When processing and matching data, parsing is a fundamental necessity. Any weakness in parsing accuracy or capability becomes immediately evident as a dramatic drop in matching accuracy. The most effective data quality software emphasizes parsing and implements it with a very high level of accuracy. Microsoft Excel is familiar to most professionals and can be an effective, easy way to keep track of large amounts of data. Without a strong Excel add-in like Aim-Smart, however, that data is very hard to parse into usable pieces, and hard to match once it has been parsed.

One of the challenges of parsing by computer is identifying what each piece of data represents; the human mind does this almost automatically. For example, a name can have several parts, as in “Mr. Stephen A Johnston Esq.”, and not all of them may be present in potential matches. A user trying to match “Mr. Stephen A Johnston Esq.” may find it listed in several formats, such as “Johnston, Stephen A”, “S Aaron Johnston Esq.”, “Mr. Stephen Arron Johnston”, and many more. When a computer knows what each part of the full name is (i.e., title, first name, last name, suffix), its effectiveness at finding matches increases greatly. Things as simple as recognizing that a title may or may not be present, or which word is the last name, are necessities when a user needs accurate matches. The most common and effective way around this problem is to divide an entry into its individual parts so that each part can be assigned a type. Labeling and dividing these pieces is easy for a person handling a small number of entries, but with large lists of data it would take far too much time. This is where a computer capable of accurate parsing becomes so important.
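A toy Python version of this labeling step follows. It is not how Aim-Smart parses names: the `parse_name` function and the short `TITLES` and `SUFFIXES` lists are hypothetical, and it assumes a comma always signals “Last, First Middle” order, so an entry like “John Smith, Jr.” would be mislabeled. Real parsers rely on much larger reference lists and more filters.

```python
# Hypothetical, deliberately tiny reference lists.
TITLES = {"mr", "mrs", "ms", "dr"}
SUFFIXES = {"jr", "sr", "ii", "iii", "esq"}


def parse_name(full: str) -> dict:
    """Split a full name into title, first, middle, last, and suffix."""
    result = dict.fromkeys(("title", "first", "middle", "last", "suffix"), "")
    last_first = "," in full
    if last_first:
        # Assumed "Last, First [Middle]" ordering.
        last_part, full = full.split(",", 1)
        result["last"] = last_part.strip()
    tokens = [t.strip(".") for t in full.split()]
    # Filter: a leading token found in the title list is a title.
    if tokens and tokens[0].lower() in TITLES:
        result["title"] = tokens.pop(0)
    # Filter: a trailing token found in the suffix list is a suffix.
    if tokens and tokens[-1].lower() in SUFFIXES:
        result["suffix"] = tokens.pop()
    # Position: in "First ... Last" order the final remaining token is the surname.
    if not last_first and tokens:
        result["last"] = tokens.pop()
    if tokens:
        result["first"] = tokens.pop(0)
    result["middle"] = " ".join(tokens)  # anything left over
    return result


for name in ["Mr. Stephen A Johnston Esq.", "Johnston, Stephen A",
             "S Aaron Johnston Esq.", "Mr. Stephen Arron Johnston"]:
    print(parse_name(name))
```

Once every variant is broken into the same labeled parts, a matcher can compare last name to last name and treat the title and suffix as optional, instead of comparing four differently formatted strings.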

Parsing is important for data storage, but inaccurate parsing is ultimately no help at all when users want to match their parsed data. To achieve the best parsing quality, the best software programs use several different processes to decide how to label each piece of data. Data may pass through multiple filters before being assigned a label. These filters can be as simple as checking whether a piece of data contains only numbers, or as specific as comparing it against detailed lists of known values. An address shows these issues firsthand. Take 871 Thornton Pkwy, Ste. 109, for example. A computer does not inherently know how each number applies to an address. Through numerous filters, the parsing software determines whether a number is the street number, the unit number, or the street name. A well-built parsing program then returns the street address divided into accurate categories. For the example above, it would return:

Street Number: 871

Street Name: Thornton

Street Type: Pkwy

Unit Type: Ste

Unit Number: 109

This process ensures that each piece of data is labeled correctly. From that point on, the data can be stored or matched with other data, and matching accuracy is dramatically improved by having the data parsed correctly.
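The filters described above can be sketched in Python. This is a simplified illustration rather than Aim-Smart’s parser: the `parse_street` function is hypothetical, the `STREET_TYPES` and `UNIT_TYPES` sets are tiny stand-ins for full reference lists, and it handles only single-line street addresses without directionals (N, SW, and so on).

```python
# Hypothetical, abbreviated reference lists.
STREET_TYPES = {"st", "ave", "blvd", "rd", "pkwy", "dr", "ln"}
UNIT_TYPES = {"ste", "apt", "unit", "fl"}


def parse_street(address: str) -> dict:
    """Label each token of a street address using a few simple filters."""
    result = dict.fromkeys(
        ("street_number", "street_name", "street_type", "unit_type", "unit_number"), "")
    tokens = [t.strip(".,") for t in address.replace(",", " ").split()]
    tokens = [t for t in tokens if t]
    # Filter 1: a leading all-digit token is the street number.
    if tokens and tokens[0].isdigit():
        result["street_number"] = tokens.pop(0)
    # Filter 2: a known unit designator plus the token after it form the unit.
    for i, tok in enumerate(tokens):
        if tok.lower() in UNIT_TYPES and i + 1 < len(tokens):
            result["unit_type"] = tok
            result["unit_number"] = tokens[i + 1]
            del tokens[i:i + 2]
            break  # stop iterating after mutating the list
    # Filter 3: a trailing token found in the street-type list is the street type.
    if tokens and tokens[-1].lower() in STREET_TYPES:
        result["street_type"] = tokens.pop()
    # Whatever remains, even if numeric, is the street name.
    result["street_name"] = " ".join(tokens)
    return result


print(parse_street("871 Thornton Pkwy, Ste. 109"))
print(parse_street("100 42 St"))
```

The second call shows why several filters are needed: both tokens are numbers, but only the leading one is labeled the street number, so 42 falls through to the street name.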

In the end, parsing matters for several reasons, from storing data to matching it. This is why accurate parsing is an important foundation for dealing with any amount of data.